Earth Observation technology for monitoring climate change

In recent decades, Earth Observation (EO) technology has grown rapidly, allowing us to gather a wealth of information about planet Earth’s physical, chemical and biological systems. Be it land, sea or air, EO is the most robust technology for monitoring and assessing the status of, and changes in, the natural and artificial environment. Its role has become particularly relevant in rapidly assessing situations during extreme weather events or natural disasters and in precisely assessing greenhouse gas (GHG) emissions, which makes EO pivotal in ensuring consistent, long-term environmental assessments in the face of unpredictable climate change.

But this has come at a cost: the massive data volumes produced every day by space-borne sensors and in-situ monitoring networks need to be converted into meaningful information before they can be used, while critical data is often heavily under-used because working with it requires a high level of technical expertise and computing capacity. Many entrepreneurs do not yet consider EO images and environmental information a commodity, and the incorporation of environmental information into economic assessments is considered largely underdeveloped. As Joe Morrison put it: “The satellite imagery industry still has no idea what customers actually want”.

Wagemann et al. (2021) recognize the following five key challenges to finding, accessing and interoperating Big Earth Data: (1) limited processing capacity on the user side, (2) growing data volumes, (3) non-standardized data formats and dissemination workflows, (4) too many data portals, (5) difficult data discovery. Consequently, Wagemann et al. (2021) propose the following three strategies to deal with the problem:

- target cloud services at intermediate users instead of policy- and decision-makers, and avoid over-engineered systems with a high level of abstraction;

- increase capacity-building to decrease the existing gap in cloud skills and uptake;

- provide a cloud certification mechanism to increase overall trust in cloud-based services.

So, although EO has caught the public’s attention now more than ever, there are still barriers to making this technology accessible and easy to use. Why are EO technology and environmental information still limited to those with highly developed technical skills? Which platform should we use to generate and share environmental data: is Google Earth Engine the only cloud service we need for EO data? How can we make monitoring systems that explain the effects of global warming on land cover changes and extreme weather (floods, droughts etc.) as simple to use and as popular as Google Maps or AccuWeather?

On July 19th 2022, the OpenGeoHub foundation, together with Wageningen University, organized a public workshop entitled ‘Innovative governance, environmental observations and digital solutions in support of the European Green Deal’. This was the first in a series of interactive meetings within the Horizon Europe Open-Earth-Monitor project, which aims to bring together Earth Observation and data experts from business, policy and academia with the community of practice, and to connect them with potential end-users of the future platforms developed by the project.

Each of the talks was video recorded and is available here alongside the main takeaways from the discussions.

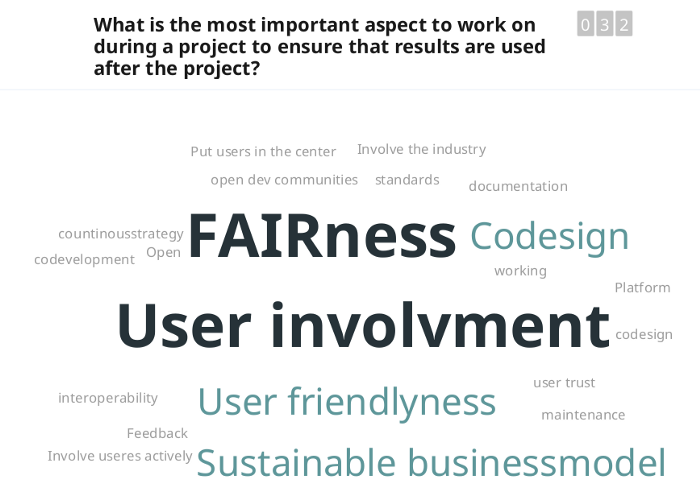

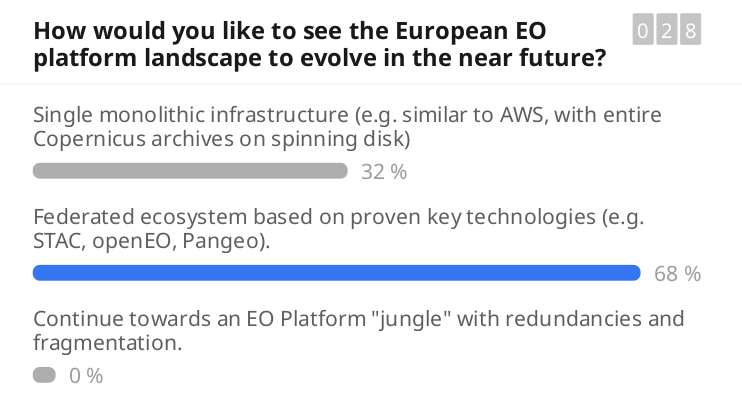

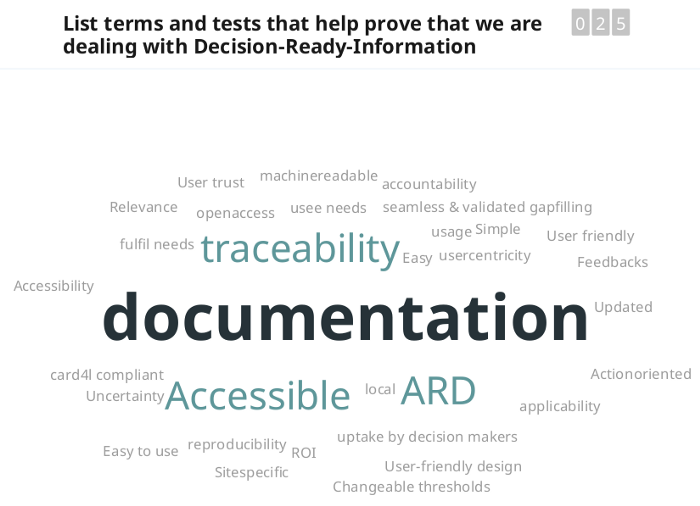

To collect opinions from the audience we used Slido.com with its word clouds (see above) and voting tools. We focus here on the 10 main takeaways (subjectively chosen; please feel free to highlight your own!) that emerged from the keynotes and were discussed further at the meeting.

#1 Tracking impacts of environmental investments is not trivial

For Joanna Ruiter of the Netherlands Space Office (NSO), one of the key problems with the projects funded by the NSO and the Dutch Government is that it is relatively easy to assign funds to organizations, but relatively difficult to track the effects of the funds spent, especially in terms of impact on the ground.

Ruiter summarized the NSO’s long-term strategy and recent national programmes, with special attention to the Geodata For Agriculture and Water programme (G4AW; EO technology to support food security and climate resilience), which includes 25 projects and innovations in 15 developing countries. In her presentation, Ruiter emphasized a gap in access to the many available technologies due to a lack of financing mechanisms, and the fact that new tools and EO information must be accompanied by financial services in order to have an impact in low- and middle-income countries. But the most important takeaway from her talk seems to be that there is still no solution for efficiently tracking environmental investments and their related impacts, i.e. for making sure that funds are spent efficiently.

#2 Overlap in pre-processing of EO data is huge (and highly inefficient)

In his talk “EO Platforms and Open Science in Support of Green Deal Ambitions”, Patrick Griffiths of the European Space Agency mentioned some of the key challenges of the EO field, for example the data management burden on scientists, particularly in the EO space, who spend 80–90% of their time ‘cleaning’ data.

The scientific community could address this burden by simplifying and democratizing processes, collaborating more and sharing data and technologies, and reducing the fragmentation and redundancy of platforms. A few projects are already opening up novel solutions: the EuroData Cube, OpenEO Platform and OpenEO API (Schramm et al., 2021). But in essence, enormous budgets are still spent on overlapping data cleaning tasks and overlapping functionality, and this is obviously inefficient.

#3 European EO platforms suffer from redundancies and fragmentation

Patrick also concluded in his talk that “European EO platforms suffer from redundancies and fragmentation”. Over the last decade, the European Commission has invested significantly in EO and in projects that channel EO technology into practice, including commercial applications, but this has also resulted in high redundancy and in many applications that are too small to survive in the market. According to Patrick, it would be more efficient if we would “stop reinventing the wheel” and focus on collaboratively building blocks that can be easily combined and are decentralized. This also seems to match the feedback received from the audience.

#4 Achieving Land Degradation Neutrality requires data co-design at every stage in decision processes

Barron Orr, Lead Scientist at the UN Convention to Combat Desertification, introduced the data and governance gaps in the context of achieving Land Degradation Neutrality (LDN), SDG target 15.3. In total, 126 countries have pledged (or at least aim) to halt the rapid land degradation caused by damaging land uses, in order to ensure food security and healthy ecosystems.

In general, EO, geospatial data and derived information play important roles in monitoring the SDG targets, planning, tracking progress, and helping nations and stakeholders make the informed decisions, plans and ongoing adjustments that will contribute toward achieving the SDGs. Orr described how data, and especially open data, is critical in achieving LDN since it broadens our understanding of the underlying land potential for any land use decision: for this reason data “must be used at every stage in decision processes, crucially at the beginning in the design process, rather than only for monitoring projects already in progress”, concluded the UN scientist.

#5 Using only EO data for decisions is too risky: quality ground data helps improve EO and vice versa

Gert-Jan Nabuurs of Wageningen University and Research discussed the challenges and opportunities of better connecting the European ground-based forest inventories with EO data. With his 27 years of experience gathering ground-based data, Nabuurs recalled how far our global data collection and sharing capabilities have come since the beginning of his career (when he ‘had to call government agencies by phone and request floppy disks in the mail.’)

The key takeaway from his talk: using EO data alone for decision making in forest inventories and planning is too risky! He illustrated this with the example of Ceccherini et al. (2020), where the abrupt increase in harvested area detected in Finland and Sweden was due to changes in the Landsat missions and issues in the Global Forest Watch product, and not as much due to increased harvesting.

In summary, EO-based monitoring projects should always be accompanied by a rigorous collection of field observations and appropriate statistical estimates, as stated by Breidenbach et al. (2022) and also confirmed in Ceccherini et al. (2022).

The expansion of EO will require increased availability of reference surface data. Yet a number of barriers still prevent National Forest Inventory (NFI) data and similar datasets from reaching the public eye (despite being predominantly publicly funded). Nabuurs pointed to some promising new approaches, like the EFISCEN model for projecting forest resources under different scenarios, improved Landsat algorithms, and open source data policies throughout Europe, that are taking steps towards improving reliability and transparency in the sector (Nabuurs et al., 2022).

Another keynote speaker, Matt Hansen, Co-Director of the Global Land Analysis and Discovery (GLAD) lab, spoke on Global Land Cover and Land Use Monitoring and likewise emphasized that the primary concern for global mapping projects is to have high-quality ground data to ensure unbiased estimators. No map is unbiased and no map is perfect: known and quantified limitations should be published together with the produced map.
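To make the idea of unbiased estimators from ground data more concrete, here is a minimal sketch of a commonly used area-adjustment calculation. It assumes a stratified random reference sample with the map classes as strata; the function name, the two classes and all numbers are invented for illustration and are not taken from the talk.

```python
def area_adjusted_proportions(confusion, map_weights):
    """Estimate class area proportions from a reference (ground) sample.

    confusion[i][j]: number of reference samples mapped as class i whose
                     ground label is class j (hypothetical counts).
    map_weights[i]:  share of the total map area mapped as class i.
    Assumes a stratified random sample with map classes as strata.
    """
    n_classes = len(confusion)
    row_totals = [sum(row) for row in confusion]
    # Estimated area proportion of each (map class, reference class) cell.
    p = [
        [map_weights[i] * confusion[i][j] / row_totals[i] for j in range(n_classes)]
        for i in range(n_classes)
    ]
    # Column sums give the area proportion of each reference class.
    area = [sum(p[i][j] for i in range(n_classes)) for j in range(n_classes)]
    # The diagonal sums to the estimated overall accuracy of the map.
    overall_accuracy = sum(p[i][i] for i in range(n_classes))
    return area, overall_accuracy


# Illustrative two-class example (forest, non-forest); all values are made up.
confusion = [[90, 10],   # mapped as forest
             [5, 95]]    # mapped as non-forest
map_weights = [0.30, 0.70]

area, overall_accuracy = area_adjusted_proportions(confusion, map_weights)
print("Adjusted area proportions:", area)
print("Estimated overall accuracy:", overall_accuracy)
```

In this made-up case the adjusted forest share differs slightly from the mapped share, which is the kind of quantified limitation that could be published alongside a map.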

#6 FAIR-TRUST-CARE data principles can help bridge the digital divide

Yana Gevorgyan, Secretariat Director, introduced the Group on Earth Observations (GEO), the global network connecting government institutions, academic and research institutions, data providers, businesses, engineers, scientists and experts to create innovative solutions to global challenges based on open EO.

At the top of GEO’s agenda are actions aimed at lowering the barriers for people and groups to access authoritative information, especially in countries with less access to EO data, by promoting and implementing the FAIR-TRUST-CARE data principles:

- F.A.I.R.: Findable, Accessible, Interoperable, Reusable; the first step in (re)using data is to find them: metadata and data should be easy to find for both humans and computers. Once the user finds the required data, it is necessary to know how it can be accessed, possibly including authentication and authorisation. The data usually need to be integrated with other data. In addition, the data need to interoperate with applications or workflows for analysis, storage, and processing.

- T.R.U.S.T.: Transparency, Responsibility, User focus, Sustainability and Technology; the TRUST Principles provide a common framework to facilitate discussion and implementation of best practices in digital preservation by all stakeholders.

- The ‘CARE Principles for Indigenous Data Governance’: Collective Benefit, Authority to Control, Responsibility, and Ethics; the CARE principles are people and purpose-oriented, reflecting the crucial role of data in advancing Indigenous innovation and self-determination. These principles complement the existing FAIR principles encouraging open and other data movements to consider both people and purpose in their advocacy and pursuits.

These general principles have an important role to play in helping bridge the digital divide between the most developed and developing nations. Gevorgyan further discussed some of the steps GEO is taking to put these principles into practice, such as working with the Open Earth Alliance to support global sustainability (and understanding) through the use of open technology solutions, and supporting agencies that offer credits for cloud technologies to low- and middle-income countries.

#7 Global and local EO products that nominally offer the same thing can be significantly different

Gilberto Câmara, former director of the National Institute for Space Research in Brazil and former director of the GEO Secretariat, explored the challenges of mapping Land Use and Land Cover, highlighting the necessity of having self-consistent maps and consistent definitions of terms, like deforestation, in order to create products that are both globally trustworthy and locally relevant. Just think of the subtle visual difference between a natural and an artificial landscape, like a savanna and a cattle pasture. Mixing up or missing such classes in an EO product can totally change its usability and applicability.

Gilberto illustrated how EO tools can fall short of being useful to policymakers at the local level when various end-users are not empowered in the development process through what he referred to as “bottom-up map production”. Mr Câmara pointed to the R-based sits library as an example of a commercially ready cloud services tool created with accessibility for end-users at its heart.

#8 Mapping land cover and similar general classes will stay important even though we can today also map detailed continuous variables

During the discussion session, Patrick Griffiths and Tom Hengl asked Gilberto whether there is still a need for land cover maps and similar general EO products when we can now also map various continuous variables (e.g. NDVI, FPAR, tree species percentage, crop types, canopy height, etc.), which basically represent land cover but at quantitative scales and in multivariate space, and thus in much higher detail. Gilberto believes that land cover maps will remain in use because we need simple explanations of common features that people can interpret and relate to quickly: “especially the policymakers, they need something that they can relate with”.
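The contrast between a continuous variable and a general class can be sketched with NDVI, which is computed from near-infrared (NIR) and red reflectance as (NIR - Red) / (NIR + Red). The snippet below is only an illustration: the reflectance values, the class names and the 0.4 threshold are assumptions chosen for the example, not values discussed at the workshop.

```python
def ndvi(nir, red):
    """Normalized Difference Vegetation Index for one pixel."""
    total = nir + red
    if total == 0:
        raise ValueError("NIR + Red is zero; NDVI is undefined for this pixel")
    return (nir - red) / total


def classify(value, threshold=0.4):
    """Collapse a continuous NDVI value into a simple class.

    The threshold and class names are hypothetical, for illustration only.
    """
    return "vegetated" if value >= threshold else "sparse or bare"


# Made-up (NIR, Red) surface reflectance pairs for three pixels.
pixels = [(0.50, 0.10), (0.45, 0.05), (0.30, 0.20)]

values = [ndvi(nir, red) for nir, red in pixels]
classes = [classify(v) for v in values]

for v, c in zip(values, classes):
    print(f"NDVI = {v:.2f} -> {c}")
```

The first two pixels end up in the same class even though their NDVI values differ: the class is easier to relate to, while the continuous value carries more detail.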

With the number of new global 10-m spatial resolution land cover products (e.g. Venter et al., 2022) appearing recently, we have no doubt that land cover mapping will remain a vibrant field of research.

#9 There is always a creative way to ensure access to basic environmental ground-truth data that can then help extend research

In the talk by Gert-Jan Nabuurs we looked specifically at the problems of accessing data such as NFI forest inventory data (at least for data mining purposes), which is currently kept private by national organizations and hence is not available to the majority of the research community. But there are many creative ways to access this data. Participants mentioned strategies such as building trust by signing collaboration agreements or offering joint products that benefit both sides. The alternative is to put pressure on governments to require that all publicly funded data is eventually released, and to make providing FAIR datasets a requirement for publication or even for promotion at work.

In the recent paper by Sabatini et al. (2022), for example, the authors produced maps of vascular plant alpha diversity using the sPlot database. These data are only available upon request by submitting a project proposal to sPlot’s Steering Committee, hence the results, in principle, can neither be reproduced nor extended. Without going into the reasons why this data is not publicly available (which is often not up to the authors to decide), this is just one of many examples of the highest-quality environmental data being under embargo and research results not being FAIR.

#10 EO industry needs applications that their customers use to take action and solve problems… on a daily basis

Going back to the topic from the start of this article, in one of his (now famous) blog posts, Joe Morrison (“the Controversial industry figure”) questions the sustainability of many modern EO businesses: “lots of new analytics startups are pursuing the dangerously seductive strategy of building a new type of dataset and then closing their eyes and hoping customers will magically show up to start buying it”. Successful businesses should instead “build applications that their customers use to take actions and solve problems. If you use an application every day, you don’t mind paying a subscription for it”. Likewise, we recognize in our own work that EO-based services need to deliver (as much as possible) decision-ready, relevant and easy-to-use information. As with many modern democratic digital systems, usability and usage (web traffic) will be our final judge of success.

Open-Earth-Monitor project

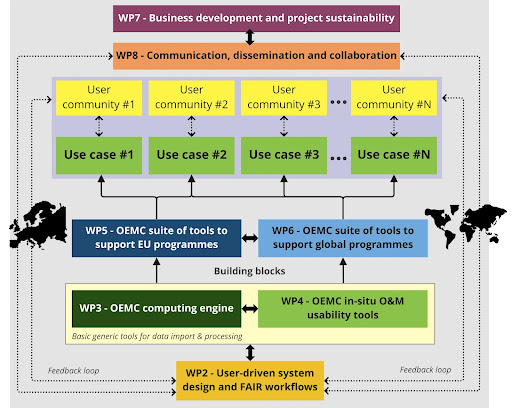

To support democratic and efficient implementation of the European Green Deal (and avoid profound geopolitical turbulence and the uncertainties brought by climate change), OpenGeoHub, together with 21 partners, has launched an ambitious new European Commission-funded Horizon Europe project called “Open-Earth-Monitor”, which aims to tackle the bottlenecks in the uptake and effective usage of environmental data, both ground observations and measurements and EO data.

The project’s main aim is to directly support European and global sustainability frameworks, such as the ambitious European Green Deal, the European Digital Transition, EuroGEO (Europe’s part of the Group on Earth Observations) and, overall, the United Nations Sustainable Development Goals (SDGs), by producing and integrating a range of open-source, data-based and user-friendly tools for monitoring European and global natural resources in support of decision-making and actions on the ground.

In a nutshell, over the next 4 years, the consortium will be: (1) building a cloud-based, open-source EO computing engine integrating Earth Observations with field data from pilot cases, (2) releasing FAIR data portals that seamlessly integrate existing and novel European data with global ones, and (3) involving end-users, decision-makers, landholders, SMEs and academia throughout the design of the tools and the testing of applications. Ultimately, the tools generated by the Open-Earth-Monitor cyberinfrastructure aim to contribute to real-world objectives and initiatives by generating use cases for testing.

The Open-Earth-Monitor Cyberinfrastructure project has received funding from the European Union’s Horizon Europe research and innovation programme under grant agreement № 101059548.

Note: this article was originally published on: https://opengeohub.medium.com/earth-observation-and-machine-learning-as-the-key-technologies-to-track-implementation-of-the-green-62ab22e9e1a8